Running TechEmpower Web Framework Benchmarks on AWS on my own

TL;DR

- I ran TechEmpower Web Framework Benchmarks on AWS by myself

- By doing that, I picked up good tools and skills for benchmarking and performance analysis

Overview

TechEmpower Web Framework Benchmarks are a collection of benchmark results measured with wrk, together with the code base used to produce those results. The benchmark scenarios are simple, but they cover a comprehensive set of web frameworks and libraries.

This is a performance comparison of many web application frameworks executing fundamental tasks such as JSON serialization, database access, and server-side template composition.

Since its launch in 2013, the effort has drawn a lot of interest across the web industry: TechEmpower has already published 17 rounds of benchmark results, and the GitHub repository had over 3,800 stars as of Jan 2019.

For me, what is more interesting than the results themselves is the code base they made open on GitHub, with decent documentation as well as the official website. With the information available there, it is very practical for any of us to run the same benchmark, so why not run it myself?

What did I do?

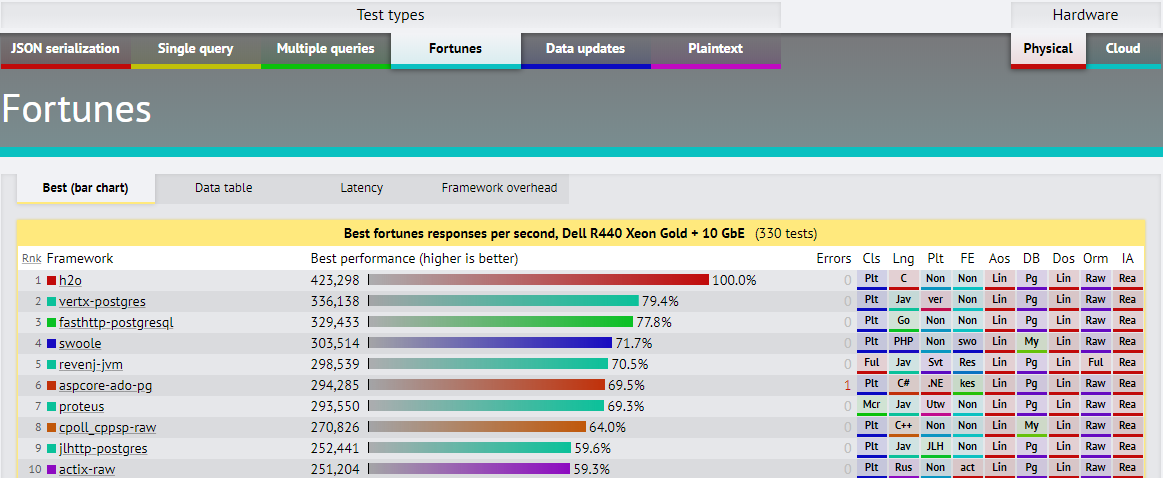

Running the TechEmpower benchmarks for all the web frameworks takes a few days to finish. So I chose h2o as a reference web framework, and ran its benchmark on AWS with TechEmpower's code from GitHub.

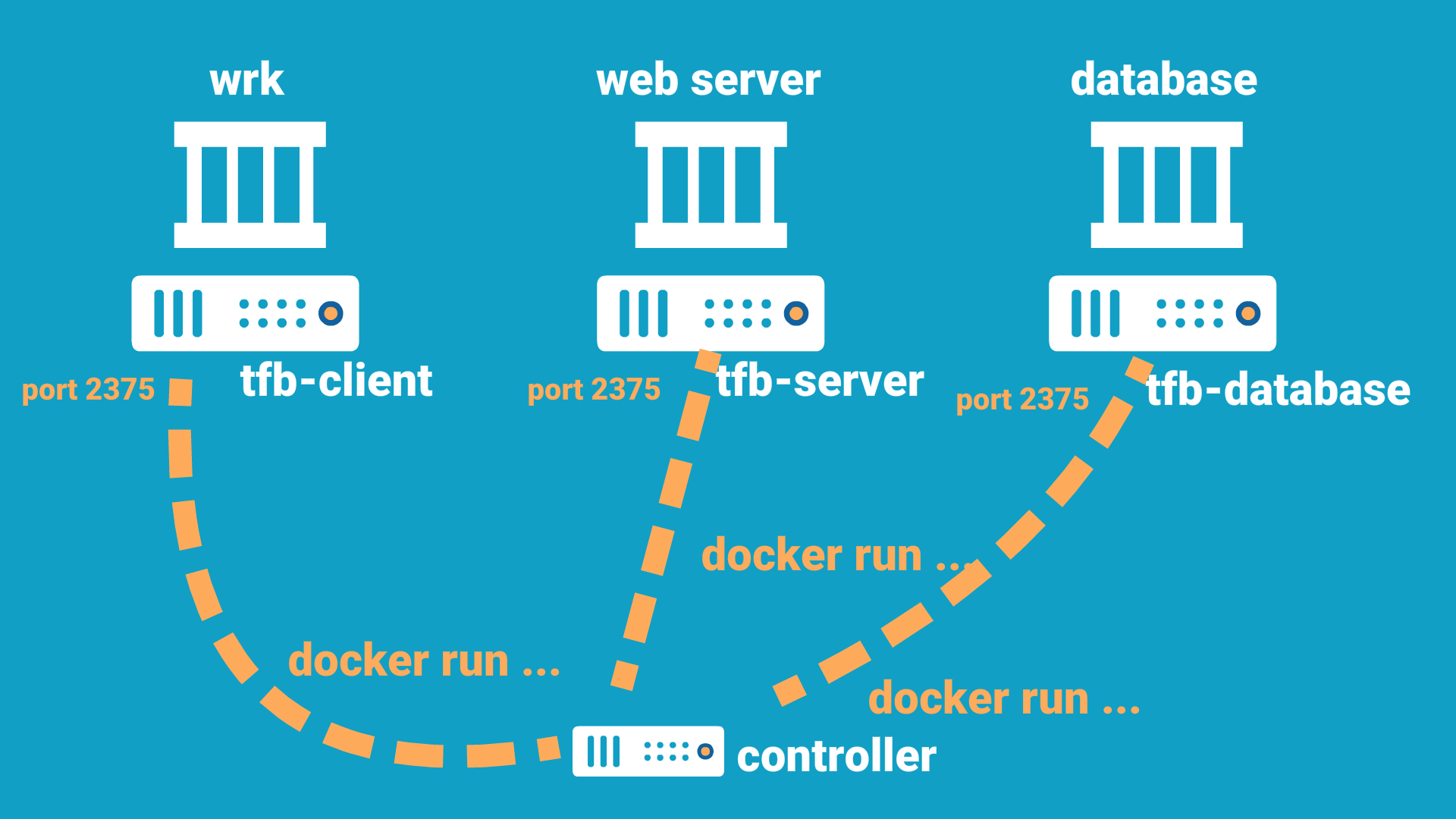

As you can see in Environment Details in the documentation, the official benchmark runs use a 3-machine setup:

All 3 machines: tfb-server, tfb-database, tfb-client

Therefore, I also set up my benchmark environment with 3 EC2 instances: one for the wrk container (the HTTP request generator), another for the web server container (h2o in my case), and the last for the database container.

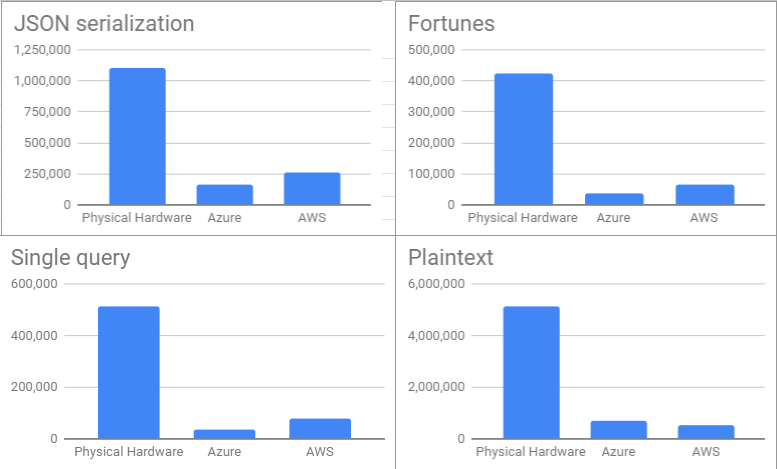

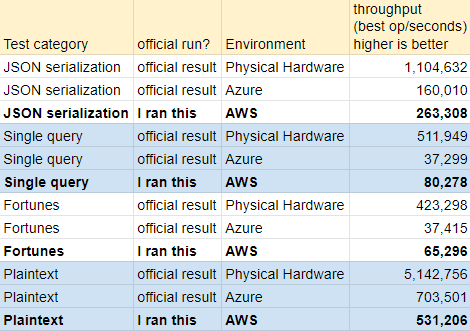

Here are the AWS results I got, compared with the official results by TechEmpower:

- Physical Hardware (official result): Self-hosted hardware called Citrine

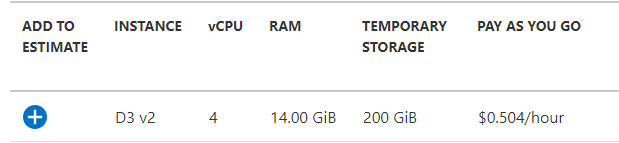

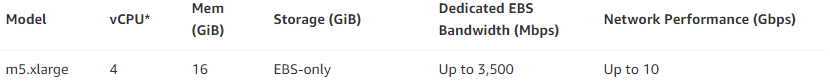

- Azure (official result): D3v2 instances

- AWS: m5.xlarge instances

For some reason I got weird results for the Multi-query and Data-update tests, so I only listed JSON serialization, Single query, Fortunes, and Plaintext.

The AWS results were noticeably different from the Azure ones, but I didn't investigate the reason, because the specific numbers did not matter much to me at this point.

What mattered more to me was that, from now on, I could run benchmarks myself again and again, for different environments, frameworks, or even custom benchmark scenarios, to get a better understanding of performance analysis.

Environment setup in more detail

(I added more detailed steps in the next article)

I mentioned containers above, and yes, everything is already Dockerized by the TechEmpower engineers. That's cool.

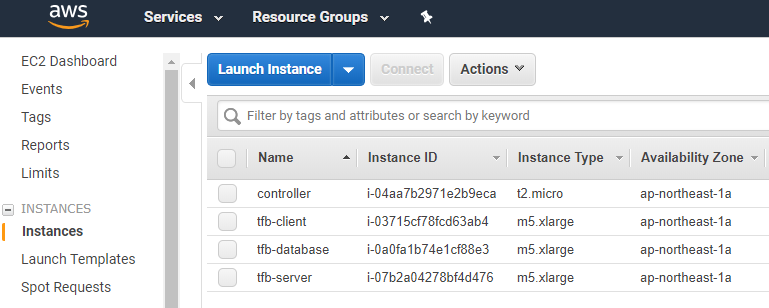

The wrk container was on one EC2 instance, the web server on another, and the database on a third. However, there was actually one more EC2 instance, a t2.micro, which I used to docker run all three containers remotely. So the actual EC2 setup was like below:

In terms of the cloud computing instances, the official environment setup description says Azure D3v2 was used.

Looking at AWS EC2 instance types, I think the closest match was m5.xlarge, with the same 4 vCPUs.

If you are familiar with AWS, you know that before launching EC2 instances you need your VPC configured: Subnet, Security Groups, Route Table, etc. If you are not familiar with EC2 or VPC, please look at introductory materials. A quick search turns up plenty of them.

One thing to note for the VPC is that the TechEmpower infrastructure expects port 2375 to be open, so that Docker containers can be launched remotely by docker run.
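Before starting a run, it can help to confirm from the controller instance that port 2375 is actually reachable on each host. The following is only a minimal sketch of such a pre-flight check, not part of the TechEmpower toolset: the function names `port_open` and `check_docker_hosts` are made up for illustration, and it assumes bash (built with `/dev/tcp` support) and coreutils' `timeout` are available.

```shell
#!/bin/bash
# Hypothetical pre-flight check; not part of the TechEmpower toolset.

# Return 0 if a TCP connection to host:port succeeds within 2 seconds.
# Relies on bash's /dev/tcp redirection, so no extra tools such as nc are needed.
port_open() {
  local host="$1" port="$2"
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Check the Docker remote API port (2375) on every host given as an argument.
# Returns non-zero if at least one host is not reachable.
check_docker_hosts() {
  local host status=0
  for host in "$@"; do
    if port_open "$host" 2375; then
      echo "$host: port 2375 reachable"
    else
      echo "$host: port 2375 NOT reachable"
      status=1
    fi
  done
  return "$status"
}

# Example call with the private IPs used later in this article:
#   check_docker_hosts 10.0.0.207 10.0.0.149 10.0.0.216
```

If a host is reported as not reachable, the usual suspects are the Security Group rules or a Docker daemon that is not listening on TCP.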

I also leveraged EC2 user data, which is a quick and convenient way to start Docker on the EC2 instances and tweak the Docker settings to open port 2375.

Once everything is set up, running the benchmark is as simple as executing the following command in the git clone-ed directory of the TechEmpower GitHub repository on the "controller" EC2 instance.
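As a rough idea of what such a user data script might contain, here is a sketch that writes a systemd drop-in so that dockerd also listens on TCP port 2375. It is not the exact script I used. It assumes a systemd-based AMI with Docker already installed at /usr/bin/dockerd, and the function name `write_docker_dropin` is hypothetical. Note that this exposes the Docker API without authentication, so the Security Group should restrict the port to the VPC.

```shell
#!/bin/bash
# Sketch of an EC2 user data script. Assumptions: systemd-based AMI,
# Docker installed at /usr/bin/dockerd, script runs as root at boot.

# Write a systemd drop-in that overrides dockerd's ExecStart so that it
# listens on TCP 2375 in addition to the socket handed over by systemd.
# The target directory is a parameter so the function can be tried out
# without touching /etc.
write_docker_dropin() {
  local dropin_dir="$1"
  mkdir -p "$dropin_dir"
  cat > "$dropin_dir/override.conf" <<'EOF'
[Service]
ExecStart=
ExecStart=/usr/bin/dockerd -H fd:// -H tcp://0.0.0.0:2375
EOF
}

# On a real instance, the remaining steps would be roughly:
#   write_docker_dropin /etc/systemd/system/docker.service.d
#   systemctl daemon-reload
#   systemctl enable docker
#   systemctl restart docker
```

The empty `ExecStart=` line clears the packaged start command before the new one is set, which is how systemd drop-ins replace rather than append to it.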

./tfb --test h2o \

--network-mode host \

--server-host 10.0.0.207 \

--database-host 10.0.0.149 \

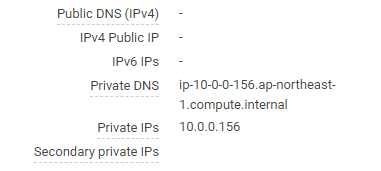

--client-host 10.0.0.216Replace the IP addresses above with the EC2 private IPs you get on the AWS web console.

(Again, more detailed instructions in the next article)

Caveats and Conclusion

Thanks to the great effort done by TechEmpower, I could run the same benchmark as theirs in a reasonable amount of time. I was pretty much an AWS beginner but could still execute it on my own.

However, I think there are a few more possible improvements to make the TechEmpower benchmark infra even better.

- Use Kubernetes to run the containers in a declarative way, rather than with procedural Python scripts, for better maintainability

- Kubernetes would also let us avoid exposing port 2375 to remotely execute docker run. Better security.

- The last point is that -v /var/run/docker.sock:/var/run/docker.sock should be avoided in docker run due to its vulnerability. This is done to run docker build inside docker run, but if we push built Docker images to a registry, that's not needed. See the following post for more information: https://www.lvh.io/posts/dont-expose-the-docker-socket-not-even-to-a-container.html
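The registry idea in the last bullet could look roughly like the sketch below: build the image once, push it, and let the benchmark hosts pull it, so no container needs the Docker socket mounted. This is my own illustration, not something the TechEmpower toolset does today. The function name `build_and_push`, the registry name, and the Dockerfile path in the usage comment are all assumptions.

```shell
#!/bin/bash
# Command used to invoke Docker. Set DOCKER=echo for a dry run that only
# prints the commands instead of executing them.
DOCKER="${DOCKER:-docker}"

# Build an image from a Dockerfile and push it to a registry, so that the
# benchmark hosts can pull it instead of building it via a mounted socket.
#   $1: full image reference, including the registry host
#   $2: path to the Dockerfile
#   $3: build context directory
build_and_push() {
  local image="$1" dockerfile="$2" context="$3"
  $DOCKER build -t "$image" -f "$dockerfile" "$context" || return 1
  $DOCKER push "$image"
}

# Example call (hypothetical registry and assumed repository layout):
#   build_and_push registry.example.com/tfb/h2o:latest \
#     frameworks/C/h2o/h2o.dockerfile frameworks/C/h2o
```

With the image in a registry, the server host only needs a plain docker run of that image reference, without the -v /var/run/docker.sock mount.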

To end the article, let me warn you that benchmarking is always an artificial activity, so pay attention to the differences from your production environment. If you do it wrong, a benchmark can even suggest the opposite of what is true about performance. Don't jump to conclusions, but keep improving your performance analysis. Benchmarks are just good tools on that path, not golden evidence to rely on blindly.